Introduction

An appraisal method should provide insight into an organization's process capabilities by identifying strengths and weaknesses. By understanding its weaknesses, an organization can prioritize elements in an improvement initiative. It can also benchmark itself against others in similar industries and of similar size and support complexity. A selected method must be replicable by any organization reviewing the same information.

The goal of an ITIL assessment model is to measure how robust the ITIL processes, activities and operations are against a set of benchmarks. Once the ITIL publications had been written, they provided the guidelines necessary to establish rough comparative measures. Validating the current state of practice offers an occasion to:

- Establish the level of opportunity that may exist in terms of efficiency and effectiveness

- Identify risks that need to be managed

- Provide a basis for establishing priorities and focus

Assessment Frameworks

Pink Elephant

|

Pink Elephant was the first to create and produce a maturity model to underpin ITIL processes. The PinkScan™ was roughly based on the Carnegie-Mellon inspired Software Capability Maturity Model. Soon many others developed similar models.

The PinkScan categories form a general outline of increasingly sophisticated processes. The use of the PinkScan is described in their booklet "ITIL® Process Maturity, Self-Assessment & Action Plan". Each process is assessed as being in one of the five stages of maturity:

- Initiation - There are ad-hoc activities present, but we are not aware of how they relate to each other within a single process. Some policy statements may have been made, but there are no documented objectives or plans and no dedicated resources or real commitment

- Awareness - Some staff may be aware of the process, but some activities are still incomplete or inconsistent and there is no overall measuring or control. The process is driven by the tool rather than defined separately from it. Positions may have been created, but roles & responsibilities are poorly defined

- Control - The process is well defined, understood and implemented. Tasks, responsibilities and authorizations are well defined and communicated and targets for quality are set and results are measured. Comprehensive management reports are produced and discussed and formal planning is conducted

- Integration - Inputs to the process come from other well controlled processes and outputs from the process go to other well controlled processes. Significant improvements in quality have been achieved and there is regular, formal communication between department heads working with different processes. Quality and performance metrics are transferred between processes.

- Optimization - The process drives quality improvements and new business opportunities beyond the process. There are direct links to IT and corporate policy and there is evidence of innovation. Quality management and continuous improvement activities are embedded in the organization and performance measurements are indicative of “world class”.

|

The Pink Elephant Rosette

In its major service offering, the PinkScan assesses each of the ten ITIL disciplines against these five levels according to an established set of criteria (they also have a mini-scan which evaluates the five Service Management processes).

|


OGC Self-Assessment Questionnaire

The following scale was prepared by Stephen Kent of the UK Government's Office of Government Commerce (OGC). The questions for each ITIL area are available by selecting the area from the list in the top right-hand corner. The areas are presented as ordinal: the organization must pass a lower level before it can progress to a higher one, and each section has some MUST elements, all of which the organization must have in place to pass that section (indicated in bold, blue coloring on the items).

Self-Assessment Questionnaires - Excel Spreadsheets

Service Management (Incident, Problem, Change, Configuration, Release)

Service Delivery (Service Level, Availability, Capacity, Continuity, Finance)

Level 1: Prerequisites

ascertains whether the minimum level of prerequisite items are available to support the process activities.

Level 1.5: Management Intent

establishes whether there are organizational policy statements, business objectives (or similar evidence of intent) providing both purpose and guidance in the transformation or use of the prerequisite items.

At the lowest levels of the framework model, the questionnaire is written in generic terms regarding products and activities. At higher levels more specific ITIL terms are used, based on the assumption that organizations achieving higher-level scores are more likely to use the ITIL vocabulary.

Level 2: Process Capability

examines the activities being carried out. The questions are aimed at identifying whether a minimum set of activities are being performed.

Level 2.5: Internal Integration

seeks to ascertain whether the activities are integrated sufficiently in order to fulfill the process intent.

Level 3: Products

examines the actual output of the process to enquire whether all the relevant products are being produced.

Level 3.5: Quality Control

is concerned with the review and verification of the process output to ensure that it is in keeping with the quality intent.

Level 4: Management Information

is concerned with the governance of the process and ensuring that there is adequate and timely information produced from the process in order to support necessary management decisions.

Level 4.5: External Integration

examines whether all the external interfaces and relationships between the discrete process and other processes have been established within the organisation. At this level, for IT service management, use of full ITIL terminology may be expected.

Level 5: Customer Interface

is primarily concerned with the on-going external review and validation of the process to ensure that it remains optimized towards meeting the needs of the customer.

Note that the scales are ordinal: 5 is greater than 4, but not necessarily by the same amount that 4 is greater than 3 (as it would be under interval or ratio measurement).
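The pass-the-lower-level-first rule and the MUST elements can be sketched in a few lines of Python. This is only an illustration of the logic described above, not the OGC spreadsheet itself: the `Answer` structure, the `min_yes` threshold per level, and the sample responses are all hypothetical.

```python
from dataclasses import dataclass

# Level identifiers as listed above; order matters because the scale is ordinal.
LEVELS = ["1", "1.5", "2", "2.5", "3", "3.5", "4", "4.5", "5"]


@dataclass
class Answer:
    yes: bool        # the organization answered "yes" to the question
    mandatory: bool  # True for a MUST element


def level_passed(answers, min_yes):
    """A level passes when every MUST item is answered yes and at least
    min_yes items overall are answered yes (min_yes is an assumed threshold)."""
    must_ok = all(a.yes for a in answers if a.mandatory)
    return must_ok and sum(a.yes for a in answers) >= min_yes


def highest_level(responses, thresholds):
    """Walk the levels in order and stop at the first level that fails."""
    achieved = None
    for level in LEVELS:
        if level not in responses:
            break
        if not level_passed(responses[level], thresholds.get(level, 0)):
            break
        achieved = level
    return achieved


# Hypothetical responses for one process area.
responses = {
    "1": [Answer(True, True), Answer(True, False)],
    "1.5": [Answer(True, True), Answer(False, False)],
    "2": [Answer(False, True), Answer(True, False)],  # a MUST item fails here
    "2.5": [Answer(True, True)],                      # never reached
}
result = highest_level(responses, {"1": 1, "1.5": 1})
print(result)
```

Because the walk stops at the first failed level, a strong showing at Level 2.5 does not compensate for a missing MUST element at Level 2, which is the behaviour the ordinal scale implies.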

[Note]. I have never seen a theoretical discussion or explanation of why these dimensions form a scale. While the scale has merit due to its simplicity, I would argue that each of these dimensions can be separately assessed according to how robust and mature the organization is on that dimension. Assessing true organizational maturity or capability is far more complex than can be determined using these tools; they merely represent a convenience for the organization.

The aim of self-assessment is to give the assessing organization an idea as to how well it is performing relative to ITIL best practice. The questionnaire may also create awareness of management and control issues that may be addressed to improve overall process capability.


MOF Self-Assessment

Microsoft offers self-assessment questions for each of its Microsoft Operations Framework (MOF) areas, which include ITIL disciplines. These can be accessed at http://www.microsoft.com/technet/. There are approximately 20 questions per area. While inferences can be drawn about the deemed importance of particular practices and processes from their inclusion as a question, there is no discussion of relative weighting or priority. Moreover, no inference should be drawn about the relative weightings amongst the areas.


Schiesser - System Management

| Rich Schiesser, in Systems Management, offers a measurement scale for the robustness of various IT service processes.


| |

The ten process characteristics cited by Schiesser are the degree to which:

- the executive sponsor shows support for the process with specific supportive actions

- the process owner exhibits desirable traits and ensures that activities are processed in a timely and accurate manner

- key customers are involved in the design and use of the process, including how availability metrics will be managed

- key suppliers, such as hardware firms, software developers, and service providers, are involved in the design of the process

- service metrics are analyzed for trends

- process metrics are analyzed for trends

- the process is integrated with other processes and tools

- the process is streamlined by automating actions

- the staff are cross-trained on the process, and the effectiveness of the training is verified

- the quality and value of process documentation are measured and maintained

Schiesser, in his book IT Systems Management, provides a scoring method that an organization can employ to benchmark its processes: "the degree to which each of these characteristics is put to use in designing and managing a process is a good measure of its relative robustness." [Note]. The relative degree to which the characteristics within each of the ten criteria are present in the organization is rated on a scale of 1 to 4.

Although the categories are the same for all IT process areas, the exact nature of the characteristics within each criterion will vary from one process to another. The table below highlights this variation.

| "In my experience, many infrastructures do not attribute the same amount of importance to each of the 10 categories within a process, just as they do not all associate the same degree of value to each of the 12 systems management processes. I refined the assessment worksheet to account for this uneven distribution of category significance - I allowed weights to be assigned to each of the categories. The weights range from 1 for least important to 5 for most important, with a default of 3. " Rich Schiesser, IT Systems Management, p. 123 |

The following table presents Schiesser's default weighting for his process areas which have ITIL equivalents.

| Process | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Total |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Availability | 3 | 3 | 5 | 5 | 5 | 1 | 3 | 1 | 3 | 1 | 30 |
| Performance & Tuning | 1 | 3 | 3 | 5 | 3 | 5 | 3 | 3 | 1 | 1 | 28 |
| Production Acceptance | 5 | 3 | 5 | 1 | 3 | 3 | 1 | 3 | 5 | 3 | 28 |
| Change Management | 5 | 5 | 3 | 1 | 3 | 3 | 3 | 3 | 5 | 3 | 32 |
| Problem (Incident) Management | 5 | 5 | 1 | 5 | 3 | 3 | 3 | 3 | 5 | 1 | 34 |
| Configuration Management | 1 | 5 | 3 | 1 | 3 | 1 | 3 | 5 | 3 | 5 | 30 |
| Capacity Planning | 5 | 5 | 5 | 3 | 3 | 3 | 1 | 1 | 3 | 3 | 32 |
| Strategic Security | 5 | 3 | 3 | 3 | 5 | 3 | 1 | 1 | 3 | 3 | 30 |
| Disaster Recovery | 5 | 3 | 5 | 3 | 3 | 3 | 1 | 1 | 5 | 3 | 32 |

The fact that the total weights vary depending upon the process area demonstrates the relative sensitivity of the different process areas to Schiesser's characteristics.
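To make the arithmetic concrete, the following Python sketch combines the category weights (1 to 5) with the ratings (1 to 4) described above. The weights are the Availability row from the table; the ratings are invented for illustration, and expressing the result as a percentage of the maximum possible score is an assumption rather than something stated in the text above.

```python
CATEGORIES = 10   # Schiesser's ten process characteristics
MAX_RATING = 4    # ratings run from 1 to 4
MAX_WEIGHT = 5    # weights run from 1 to 5


def weighted_score(weights, ratings):
    """Return (score, max_score, percentage) for one process.

    score is the sum of weight * rating over the ten categories;
    max_score is what the process would earn if every category were
    rated MAX_RATING. The percentage normalisation is an assumption.
    """
    if len(weights) != CATEGORIES or len(ratings) != CATEGORIES:
        raise ValueError("expected one weight and one rating per category")
    if any(not 1 <= w <= MAX_WEIGHT for w in weights):
        raise ValueError("weights must be between 1 and 5")
    if any(not 1 <= r <= MAX_RATING for r in ratings):
        raise ValueError("ratings must be between 1 and 4")
    score = sum(w * r for w, r in zip(weights, ratings))
    max_score = sum(weights) * MAX_RATING
    return score, max_score, 100.0 * score / max_score


# Default weights for Availability, taken from the table above.
availability_weights = [3, 3, 5, 5, 5, 1, 3, 1, 3, 1]
# Hypothetical ratings an organization might give itself.
example_ratings = [2, 3, 4, 2, 3, 1, 2, 1, 3, 2]

score, max_score, pct = weighted_score(availability_weights, example_ratings)
print(score, max_score, round(pct, 1))
```

Normalising by the maximum possible score lets processes with different total weights be compared on the same footing, which matters because the totals differ from one process area to the next.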

Schiesser avoids suggesting that his assessment scheme allows an organization to compare itself with other organizations. Rather, it provides an internal measure of progress over time against his set of Key Performance Indicators.

| "Apart from its obvious value of quantifying areas of strength and weakness for a given process, this rating provides two other significant benefits to an infrastructure. One is that it serves as a baseline benchmark from which future process refinements can be quantitatively measured and compared. The second is that the score of this particular process can be compared to those of other infrastructure processes to determine which ones need most attention. " Rich Schiesser, IT Systems Management, p. 123 |


Standard CMMI Appraisal Method for Process Improvement (SCAMPI)

The SCAMPI method was developed over several years in parallel with the CMMI. It is part of the CMMI product suite, which includes models, appraisal materials, and training materials. SCAMPI V1.1 enables a sponsor to:

- gain insight into an organization’s engineering capability by identifying the strengths and weaknesses of its current processes

- relate these strengths and weaknesses to the CMMI model

- prioritize improvement plans

- focus on improvements (correct weaknesses that generate risks) that are most beneficial to the organization given its current level of organizational maturity or process capabilities

- derive capability level ratings as well as a maturity level rating

- identify development/acquisition risks relative to capability/maturity determinations

The methodology has three distinct phases.

[Ref]

SCAMPI V1.1, as a benchmarking appraisal method, relies upon an aggregation of evidence that is collected via instruments, presentations, documents, and interviews. These four sources of data feed an “information-processing engine” whose parts are made up of a series of data transformations. An appraisal team observes, hears, and reads information that is transcribed into notes, and then into statements of practice implementation gaps or strengths (where appropriate), and then into preliminary findings. These are validated by the organizational unit before they become final findings.

| The critical concept is that these transformations are applied to data reflecting the enacted processes in the organizational unit and the CMMI reference model, and this forms the basis for ratings and other appraisal results. Standard CMMI Appraisal Method for Process Improvement (SCAMPI), Version 1.1: Method Definition Document, December 2001, p. 25

|

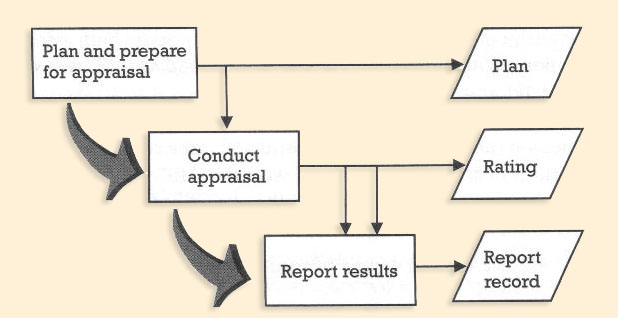

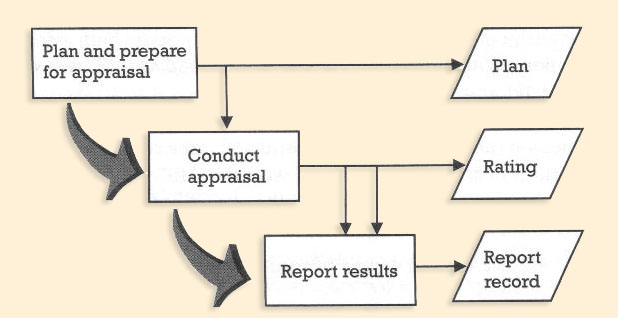

SCAMPI phases

| Phase | Process |
| --- | --- |
| 1 Plan and prepare for appraisal. | Analyze requirements. Develop appraisal plan. Select and prepare team. Obtain and analyze initial objective evidence. |
| 2 Conduct appraisal. | Examine objective evidence. Verify and validate objective evidence. Document objective evidence. Generate appraisal results. |
| 3 Report results. | Deliver appraisal results. Package and archive appraisal results. |

To be consistent, repeatable, and accurate, the SCAMPI method requires collection and analysis of objective evidence from various sources, such as questionnaires, presentations, documents, and interviews. Typically, a team of trained professionals, led by an authorized lead appraiser, examines this objective evidence, ensures its validity, and makes observations about its compliance with the CMMI. The observations are then transformed into statements of strengths and weaknesses, and finally into findings that are presented to the appraisal sponsor. The ratings resulting from the validated observations and findings indicate how well the enacted processes reflect the CMMI model requirements.

In the CMMI models, specific and generic goals are required model components, whereas specific and generic practices are expected model components. This means that the goals are used for rating purposes, while practices are used as a guide to evaluating their satisfaction. Because of the goal-practice relationship, goals can be satisfied only if the associated practices are implemented. Therefore, the extent of practice implementation is investigated to determine if the goals and, in turn, the Process Areas are satisfied. The objective evidence collected, examined, and analyzed is the basis for judgment of component satisfaction. Although the objective evidence associated with each individual practice is examined, the entire model and its intent are needed to reach decisions about the extent to which those practices are or are not implemented. The appraisal team keeps notes, develops observations, and characterizes the evidence as indicating strengths or weaknesses.

SCAMPI Appraisal Artifacts

To support that evaluation, SCAMPI introduces the Practice Implementation Indicator (PII). The PII is an objective attribute or characteristic used to verify the conduct of an activity or implementation of a CMMI practice. There are three types of PIIs:

- Direct artifacts are outputs resulting from implementation of the process.

- Indirect artifacts arise as consequences of performing a practice or substantiate its implementation.

- Affirmations are oral or written statements confirming or supporting implementation of a practice.

Items listed as "typical work products" in the CMMI documents are representative of direct and indirect artifacts. Meeting minutes, review results, and status reports are also indirect artifacts. Affirmations are obtained from interviews or through questionnaire responses. Each type of objective evidence must be collected for every practice in order to corroborate the practice implementation.

PIIs are verified throughout the appraisal process until there is sufficient objective evidence to characterize the implementation of a practice. For practice implementation evidence to be declared sufficient, it has to contain direct artifacts corroborated by either indirect artifacts or affirmations.


PIIs are rated as:

- FI (Fully Implemented) - direct artifacts are present and appropriate, at least one indirect artifact or affirmation is noted, and no substantial weaknesses are noted

- LI (Largely Implemented) - as for FI, except that one or more weaknesses are noted

- PI (Partially Implemented) - the direct artifact is absent or inadequate, or the practice may not be fully implemented, and there are documented weaknesses

- NI (Not Implemented) - non-existent
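The rating rules above lend themselves to a small decision function. The Python sketch below is one possible reading of them, not the SCAMPI method definition itself: the `Evidence` fields are hypothetical, and the boundary between PI and NI (some evidence of any kind versus none at all) is an assumption made for the example.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    direct_present: bool      # a direct artifact was found
    direct_appropriate: bool  # the direct artifact was judged adequate
    indirect_count: int       # number of indirect artifacts noted
    affirmation_count: int    # number of affirmations noted
    weaknesses: int           # number of weaknesses documented


def characterize(e):
    """Map the evidence for one practice to FI, LI, PI or NI."""
    # Direct artifacts must be corroborated by at least one
    # indirect artifact or affirmation.
    corroborated = (e.indirect_count + e.affirmation_count) >= 1
    direct_ok = e.direct_present and e.direct_appropriate
    if direct_ok and corroborated:
        return "FI" if e.weaknesses == 0 else "LI"
    # Assumption: any remaining evidence at all counts as partial.
    if e.direct_present or corroborated:
        return "PI"
    return "NI"


samples = [
    Evidence(True, True, 1, 0, 0),
    Evidence(True, True, 0, 2, 1),
    Evidence(False, False, 1, 0, 2),
    Evidence(False, False, 0, 0, 0),
]
ratings = [characterize(s) for s in samples]
print(ratings)
```

In a real appraisal these characterizations are team judgments; the function only shows how the stated conditions combine.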

The appraisal outputs are the findings, statements of strengths and weaknesses identified, and an appraisal disclosure statement that precisely identifies the following:

- Organizational unit;

- Model selected;

- PAs appraised;

- Specific and generic goal ratings;

- Maturity or capability level ratings.


CobIT

Increasingly, business practice involves the full empowerment of business process owners so they have total responsibility for all aspects of the business process, including IT processes. By extension, this includes providing adequate controls. The COBIT Framework provides a tool for the business process owner to discharge this responsibility.

| "In order to provide the information that the organization needs to achieve its objectives, IT resources need to be managed by a set of naturally grouped processes." COBIT’s Management Guidelines |

In order to provide the information that the organization needs to achieve its objectives, IT resources should be managed through process groupings. The CobIT Framework describes a set of 34 high-level Control Objectives, one for each of the IT processes, grouped into four domains:

- planning and organization,

- acquisition and implementation,

- delivery and support, and

- monitoring.

CobIT contends that this structure "covers all aspects of information and the technology that supports it" [Note]. By addressing these 34 high-level control objectives, the business process owner can ensure that an adequate control system is provided for the IT environment.

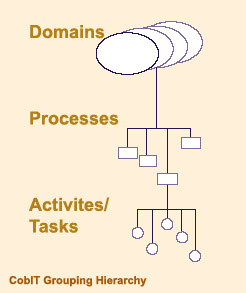

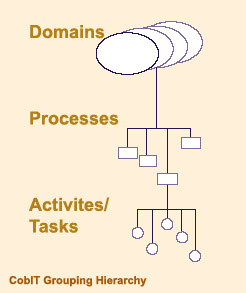

The CobIT Framework consists of high-level control objectives and an overall structure for their classification. The underlying theory for the classification is that there are, in essence, three levels of IT efforts when considering the management of IT resources. Starting at the bottom, there are the activities and tasks needed to achieve a measurable result. Activities have a life-cycle concept while tasks are more discrete. The life-cycle concept has typical control requirements different from discrete activities. Processes are then defined one layer up as a series of joined activities or tasks with natural (control) breaks. At the highest level, processes are naturally grouped together into domains. Their natural grouping is often confirmed as responsibility domains in an organizational structure and is in line with the management cycle or life cycle applicable to IT processes.

|

|

CRITICAL SUCCESS FACTORS (CSF) define the most important issues or actions for management to achieve control over and within its IT processes. Without these conditions/factors the organization will have difficulty in meeting business objectives (as defined by KPIs). Therefore, the presence or absence of CSFs can be used to define the maturity of the organization - they provide a way for the organization to self-evaluate. The approach was derived from CMM and reflects CMMI methodology.

Against these levels, developed for each of COBIT’s 34 IT processes, management can map:

- The current status of the organization — where the organization is today

- The current status of (best-in-class in) the industry — the comparison

- The current status of international standards — additional comparison

- The organization's strategy for improvement — where the organization wants to be

The generic descriptions for each level of the maturity model are as follows [Note]:

- 0 - Non-Existent. Complete lack of any recognizable processes. The organization has not even recognized that there is an issue to be addressed.

- 1 - Initial. There is evidence that the organization has recognized that the issues exist and need to be addressed. There are however no standardized processes but instead there are ad hoc approaches that tend to be applied on an individual or case by case basis. The overall approach to management is disorganized.

- 2 - Repeatable. Processes have developed to the stage where similar procedures are followed by different people undertaking the same task. There is no formal training or communication of standard procedures and responsibility is left to the individual. There is a high degree of reliance on the knowledge of individuals and therefore errors are likely.

- 3 - Defined. Procedures have been standardized and documented, and communicated through training. It is however left to the individual to follow these processes, and it is unlikely that deviations will be detected. The procedures themselves are not sophisticated but are the formalization of existing practices.

- 4 - Managed. It is possible to monitor and measure compliance with procedures and to take action where processes appear not to be working effectively. Processes are under constant improvement and provide good practice. Automation and tools are used in a limited or fragmented way.

- 5 - Optimized. Processes have been refined to a level of best practice, based on the results of continuous improvement and maturity modeling with other organizations. IT is used in an integrated way to automate the workflow, providing tools to improve quality and effectiveness, making the enterprise quick to adapt.
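Mapping current status against the desired position amounts to a simple gap analysis over the six levels. The Python sketch below illustrates the idea under stated assumptions: the process labels and the current and target levels are hypothetical placeholders, and ranking by the size of the gap is just one way an organization might set priorities.

```python
from enum import IntEnum


class Maturity(IntEnum):
    """The six generic maturity levels listed above."""
    NON_EXISTENT = 0
    INITIAL = 1
    REPEATABLE = 2
    DEFINED = 3
    MANAGED = 4
    OPTIMIZED = 5


def maturity_gaps(current, target):
    """Return (process, gap) pairs, largest gap first.

    gap = target level minus current level, for every process
    that appears in both mappings.
    """
    gaps = {p: int(target[p]) - int(current[p]) for p in current if p in target}
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


# Hypothetical self-assessment for three unnamed IT processes.
current = {
    "Process A": Maturity.INITIAL,
    "Process B": Maturity.DEFINED,
    "Process C": Maturity.REPEATABLE,
}
target = {
    "Process A": Maturity.DEFINED,
    "Process B": Maturity.MANAGED,
    "Process C": Maturity.REPEATABLE,
}

ranked = maturity_gaps(current, target)
print(ranked)
```

Since the levels are ordinal, the numeric gap is best read as a rough ordering aid rather than as a measure of the effort required.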


Help Desk Institute

|

The Help Desk Institute publication "Implementing Service and Support Management Processes" distinguishes key service management processes according to their stage within the organization:

- implementation processes,

- ongoing operational processes, and,

- optimization processes

Each process chapter contains a section on Measurement, Costing and Management Reporting under each of these three process stages. The cited deliverables provide valuable information for assessing process robustness within the organization.

[Note]

|

| Process | Implementation | Operations | Optimization

|

| Configuration Management

| - Before the start of the project, providing any policies, process or procedures currently in use by the Service Center to manage the inventory and configuration of the services and systems they support

- Business and technical requirements for the development of the new policies, process, procedures and the Configuration Management tool, respectively

- List of inventories managed by the Service Center before start of project

- List of inventories to be managed for the implementation of the project and ongoing operations

- List of end-users and their profiles along with their hardware, software and license usage

- Requirements for training of Support Center staff for the use of the new process and tool

- Reports that classify incidents by CI class and CI type

- Reports of incidents caused by wrongly made changes

- Undocumented information or knowledge about various CI relationships accumulated by the Support Centre’s staff over time as they learn from their troubleshooting experiences

| - Training plan for staff

- Action plan to address discrepancies found in audit reports

- Configuration management plan for CIs under the control Service

- Recommendations for continuous process improvements

| - Continuous improvement plans

- New business and functional requirements for tool enhancements

- Reports to justify technology enhancement or new technology investments

- IT strategy plan that aligns with and supports the business plan

|

| Change Management

|

- Evaluate the current state

- Create mission/purpose statement

- Define the 'to be' process (include emergency change process).

- Establish a Change Management policy.

- Determine the change review process.

- Create Change Request Submission Process and forms

- Define the change approval process.

- Define the post implementation review process.

- Create and communicate a Forward Schedule of Change and a Change Calendar.

- Automate the process, sub-processes, and procedures.

| - Change processes are monitored and measured

- Change Calendar available

- Forward Schedule of changes

- Change Management process is communicated and accepted by all stakeholders

|

- Management by fact

- Controls are visible, verifiable and regularly reported

- Culture of causality

- Organizational learning through history of successful change management

- Accurate and complete CMDB repository

- Volatile components with stable infrastructure

- Detective controls in place on infrastructure

|

| Release Management

| - Requirements checklist

- Communicate to Support Center team

- Formal request to Release Management for reporting and management information requirements

- Business considerations (month end, holidays, end-users informed and educated, etc.): planning in conjunction with the activities of the business or with IT activities

- Policy for Standard Requests

| - Updates to requirements as needs change

- Feedback to release management on call volumes, issues

- Participation in Project Implementation Review meetings

- Provides estimate for the cost of supporting the new service

| - Review and update the Requirements Document as required and provide to Release Management

- Prepare and distribute management information to Release Management, support areas, and other interested parties regarding roll-out statistics (include charts/graphs as required)

- Project plan to train staff for next major roll-out

- Budgeting so that staffing levels are at required levels

- Tools are sufficient and well authored

- Provide feedback to Release Management and/or project team

- Identify weak links - determine action to remedy

|

| Incident Management

| - Blueprint for how Incident Management will function within the Support Center.

- Current State Assessment

- Gap Analysis

- work instructions

- job aids

- tool and core process training materials

| - Monitoring the status and progress toward resolution of all open incidents

- Keeping affected users informed about progress

- Escalating processes as necessary.

| - Metrics around such items as trends and workloads provide management with the information they need in order to justify budgets for additional resource needs such as additional staff, tools, etc.

- outsourcing options considered based on requirements

|

| Problem Management

| - Notification Process Flowcharts

- Escalation Process Flowcharts

- Feedback Process Flowcharts

- Population of Knowledgebase, Process and Procedure

| - Management reports, and associated analysis, required for the management of the Problem Management process

- Communication to other processes and functional managers, as well as to suppliers, users and customers

- A 'top 10' list of problems, including what has been done so far to deal with them, and what will be done next, is highly recommended

| - Updated OLA (internal)

- Updated SLA (external)

- Processes

- Training plans

|

| Service Level Management

| - Creates and maintains a catalog of existing services offered by the IT department

- Formulates, agrees and maintains an appropriate SLM structure for the organization, to include

- Operational Level Agreements (OLAs) within the IT provider organization

- Service Level Agreement (SLA) structure (e.g. service based, customer based or multi-level)

- Third Party Supplier/Contract Management relationships to the SLM process

- Accommodates any existing Service Improvement Plans/Programs within the SLM process

- Negotiates, agrees and maintains the Service Level Agreements with the customer

- Negotiates, agrees and maintains the Operational Level Agreements with the IT provider

- Negotiates and agrees with both the customer and IT provider any Service Level Requirements for any proposed new/developing services

- Analyzes and reviews service performance against the SLA and OLA

- Produces regular compliance reports on service performance and achievement to the customer as well as the IT support groups at an appropriate level

| Not Addressed

| Not Addressed

|

| Capacity & Workforce Management

| - software budget

- process by which the software was selected

- list of software requirements

- analysis of the vendor and/or in-house offerings that were evaluated

- software implementation plan

- Capacity plan

- recommendations to Senior Management for Service Desk investment

- improved staff skills and training

- planned buying, rather than panic buying, for Service Desk resources

- smoother implementation of new systems and services

- managed head count on the Service Desk.

| New forecasts and schedules

| - Adjusted forecasts and schedules

- Training plans

- Recruiting plans and budgets

|

| Availability Management

| - SLA(s)

- SLRs

- Service plans for the education of the Availability Management function

- Identification of ways in which availability can be improved

- Availability Management plan

| - Management reports and updates, documentation, and a continually updated database

| - The demarcation lines between SCM and Availability Management must be clear

- nothing should be reactive to events.

- All contracts must be robust in terms of serviceability, reliability and maintainability clauses.

- The tools infrastructure must be unimpeachable in its ability to monitor and flag issues in advance of problems.

|

| IT Service Continuity Management

|

| - Testing schedule and objectives

- Input to post test review meeting and report

- Support Center continuity plan awareness materials

- Input to induction training materials

- Input to change request impact assessments

| - Updated contact details

- Updated procedural documentation

|

| Customer Satisfaction

| - Survey instrument design

- Sampling plans

- Spontaneous feedback management process

- Incident based feedback process

- Tool selection

- Training staff for handling spontaneous feedback

- Model reports

| - Spontaneous feedback report

- Incident based measurement report

| - Process design improvements

- Process management

|

Assessment Framework Problems & Issues

ITIL V3 cites several drawbacks associated with assessments:

- An assessment provides only a snapshot in time of the process environment. As such it does not reflect current business or cultural dynamics and process operational issues.

- If the decision is to outsource the assessment process, the assessment and maturity framework can be vendor or framework dependent. The proprietary nature of vendor-generated models may make it difficult to compare to industry standards.

- The assessment can become an end in itself rather than the means to an end. Rather than focusing on improving the efficiency and effectiveness of processes through process improvement, organizations can adopt a mindset of improving process for the sake of achieving maturity targets.

- Assessments are labour-intensive efforts. Resources are needed to conduct the assessments in addition to those responding such as process or tool practitioners, management and others. When preparing for an assessment, an honest estimate of time required from all parties is in order.

- Assessments attempt to be as objective as possible in terms of measurements and assessment factors, but when all is said and done, assessment results are still subject to opinion of assessors. Thus assessments themselves are subjective and the results can have a bias based on the attitudes, experience and approach of the assessors themselves.

ITIL v3, Continual Service Improvement, p. 96

|

In addition to these stated issues, there are specific challenges in selecting a framework whose best practices will serve as the benchmark for comparison:

- lack of total agreement and standardization on an assessment framework - though CobIT is increasingly becoming accepted amongst the audit community and is increasingly being recognized as a governance framework,

- the selection of process sub-areas and any weighting attached to them is largely subjective,

- implementing best practices must acknowledge an organization's maturity capabilities since they define how well it can integrate processes and sustain any improvement gains,

- ITIL process areas, though more exhaustive in version 3 than version 2, remain neither exhaustive of key processes (when compared to CobIT), nor independent of each other. They interact in multiple and often unique ways. Some sub-activities may even be pre-conditions for others.


Example Self-Assessment with Maturity Level Valuation

An assessment model that recognizes that IT Service Management processes and sub-processes should be targeted to specific maturity levels provides a more meaningful blueprint. CMMI provides the base theoretical underpinning for this. It includes key sub-activities and Critical Success Factors for each process area to provide a more granular and meaningful depiction of an organization's current processes and improvement over time.

The following table presents what this might look like for an assessment of an organization's Change Management process. It describes what Change Management sub-processes would resemble at different levels of maturity.

Change Management

| Activity | Process Questions Geared to Maturity Levels

|

| Process Interfaces

|

Risk Management

- Are change requests reviewed by someone for their potential impact on the infrastructure ?

- Are there one or more specific roles identified which have overall responsibility for assessing the risks of changes into the production environment ?

- Is there a standard methodology or set of agreed-upon practices for risk assessment in the organization ?

- Are changes assigned a relative risk 'score' based upon established risk assignment criteria ?

- Are changes assigned a risk 'score' which can be evaluated following implementation to determine whether the anticipated risks occurred, and used to improve the methodology for the assignment of risk ?

Configuration Management

- Are components of the live environment that might be affected by a Change identified?

- Are components of the live environment that might be affected by a Change identified through a history of past Changes that have affected them?

- Is there a data source describing the interdependencies of infrastructure components which is referenced to assess the risks associated with a change ?

- Is the success or failure of a change analyzed against a database describing how infrastructure elements relate to each other ?

- Have models been developed relating change success to such identifiers as Mean Time Between Failure (MTBF) of infrastructure components ?

Validation and Testing

- Is a Build Book or some other set of procedures created to direct implementation of changes ?

- Are major changes tested in a test environment before implementation ?

- Does an independent team test all changes in a pre-production environment prior to implementation ?

- Is there a set of standard test procedures which are used to test implementations ?

- Are test procedures assessed for continued quality following review of the change implementation ?

|

| Risk Management

|

- Are contingency actions prepared which can be invoked should a change implementation appear to cause problems ?

- Are back-out plans tested for every change ?

- Are there standard back-out plans which can be invoked quickly and which have been approved by Change Management ?

- Are back-out plans periodically reviewed for continued relevance in light of new technologies and approaches ?

- Is the success of back-out plans measured and are modifications made to the plans on the basis of the success or failure ?

|

| Change Calendar

|

- Is a log of scheduled changes maintained ?

- Are changes scheduled to ensure that the risk of a change does not affect another scheduled change ?

- Is there a single, consolidated schedule of all upcoming changes to be implemented into the production environment ?

- Are infrastructure components involved in a scheduled change cross-correlated with other scheduled changes to uncover possible interdependencies in the schedule ?

- Are past changes analyzed to assess scheduling risks in order to develop policies to avoid conflicts in scheduling in the future ?

|

| Filing Request for Change

|

- Does a description of the change accompany the scheduling of a change ?

- Are there one or more specific forms used with common entries relating to a change ?

- Is Change information entered into a single, consolidated data source which permits easy access by all stakeholders ?

- Can you reliably categorize changes according to the area of infrastructure affected ?

- Do you regularly review change data to discover patterns and causal determinants of infrastructure incidents which may be related to changes ?

- Is there provision for interested parties to comment on a requested change ?

- Is there a perceived obligation on the part of a Change initiator or other persons involved in the change process to actively obtain comment on a change from affected parties ?

- Is there a formalized process (e.g., Change Advisory Board(s)) to ensure stakeholder review and sign-off of a change prior to its introduction to the production environment ?

- Is there follow-up to assess whether the review of changes is adequate for the kind of change implemented ?

- Is there a periodic evaluation of the relative value of soliciting stakeholder comment and involvement in the change process ?

- Are change requests sometimes referred back to the originator because the information provided on the change is not sufficient ?

- Does someone assess the provision of key information on a change and return a change request when that information is not provided ?

- Do all changes follow an approval process which prevents them from being implemented until key information describing the change is provided ?

- Are there specific guidelines describing what constitutes an absence of key information which would require that a change request be returned to an originator for further action prior to implementing a change ?

- Is an evaluation of the quality of information pertaining to a change carried out ?

|

| Change Prioritization

|

- Is there provision for expediting the implementation of changes considered 'Urgent' ?

- Are changes prioritized based upon a common set of criteria ?

- Are some changes considered 'pre-authorized' and follow expedited processes for implementation ?

- Are change requests regularly evaluated to see if they might benefit from establishing or using existing 'Change Templates' which describe commonly employed and tested procedures ?

- Are 'Change Templates' periodically reviewed for continuing consistency and amended when a particular change might indicate that the Template could be improved by modifying it ?

- Is there an identified source which approves changes ?

- Is there a class of changes which can be approved by a specific role identified as having responsibility for corporate-wide changes ?

- Does the organization have a corporate 'Change Manager' responsible for approving certain kinds of changes and for routing other classes of change to the appropriate bodies ?

- Is the performance of the corporate Change Manager assessed against established Key Performance Indicators ?

- Are recommendations for improvement in the sphere of authority of the Change Manager periodically advanced ?

- Are there changes which are approved and implemented by local bodies without reference to a central change approval body ?

- Are there local changes which are implemented by defined local sources with specific knowledge of that area and which have a specific mandate to do so by a corporate change authority ?

- Are there 'Local Change Boards' with mandated responsibilities for reviewing changes within defined sectors of the infrastructure ?

- Is the success of 'Local Change Boards' measured against Key Performance Indicators established for them ?

- Are Local Change Boards reviewed periodically for 'best practices' which can be extended and/or emulated throughout the organization ?

- Are high volume but low risk changes excluded from formal change procedures ?

- Is there a definition of the kinds of changes which are excluded from formal Change Management procedures ?

- Have low risk, high volume changes been identified as 'standard' changes which are subject to expedited procedures formally approved by Change Management ?

- Are deviations from established 'standard' procedures identified and required to be processed through regular Change Management processes ?

- Are deviations from 'standard' processes reviewed and reflected in changes to the 'standard' procedure where necessary and appropriate ?

|

| Post-Implementation Change Review

|

- Are changes reviewed after implementation to ensure that information pertaining to the change was complete ?

- When incident(s) occur as a result of a change is there a review initiated to assess the change ?

- After implementation of each major change is a review conducted with participants and stakeholders to assess 'lessons learned' ?

- Is the Change implementation explicitly compared against best practices descriptions ?

- Are deviations from best practices explained or integrated into an improved Change Management process description ?

|

| Process Management

|

- Are methods of implementing a change emulated from one change to the next wherever possible ?

- Is there an agreed upon method for introducing changes ?

- Is there a process description for Change Management?

- Are Change Management policies and procedures used to modify Change Management process descriptions ?

- Are Change Management processes evaluated against a set of objectives and annually reviewed for effectiveness and to assess return on investment (ROI) to the organization ?

- Do changes usually follow established patterns ?

- Are there descriptions or processes for aspects of a change ?

- Do all changes conform to a developed process description for Change Management ?

- Is there a periodic review of the degree to which Changes conform with or deviate from established guidelines and processes?

- Are deviations from established procedures reviewed for cause and, where appropriate, result in recommendations for changes to the process ?

|

| Process Responsibilities

|

- Is there someone responsible for coordinating the scheduling of changes ?

- Is there a person responsible for the entire Change Management process ?

- Are those performing Change Management aware of their roles and responsibilities ?

- Are change management functional responsibilities reflected in job descriptions ?

- Are functional responsibilities reviewed annually to reflect annual performance reviews and change management role modifications ?

|

| Process Outcomes

|

- Do you reference incidents which led to a change as part of the change request process ?

- Do you have commitments with customers which govern how and how quickly you complete a change ?

- Can you definitively determine which items in the infrastructure will be affected by a change ?

- Can incidents be cross-referenced against changes which may have caused them ?

- Does Availability Management track the impact of changes on overall Availability ?

|

| Performance Measurement

|

- Are periodic assessments of how well Change management is performing conducted ?

- Are post-implementation reviews of significant changes conducted, 'lessons learned' recorded and recommendations advanced for improvement ?

- Have Key Performance Indicators been devised for Change Management ?

- Are Changes quantitatively assessed against Change Management Key Performance Indicators ?

- Are deficiencies in Change Management processes empirically reviewed for root cause and remedial actions recommended ?

- Is data on Changes collected ?

- Is data collected on the kind of changes implemented, approval source and status, length of time to implement and amount and kind of testing conducted ?

- Is information on the configuration items which may be impacted by a change associated with the change record ?

- Is the time required for stages of the Change lifecycle, such as 'requesting', 'testing' and 'implementing', logged ?

- Are resources used in the implementation of the change accounted for so that a 'Total Cost of Change' can be determined ?

- Are Changes assessed for their success or failure in implementation ?

- Is a post-implementation review of major changes conducted to determine lessons learned and record lessons for future evaluation ?

- Are changes matched against incidents caused by poor implementations ?

- Is the success of Changes assessed against Key Performance Indicators ?

- Are statistics of Changes maintained and is this data used to evaluate change costs and success ratios ?


|

The interviewee would be asked to indicate which of the five items in each group best reflects the implementation of that aspect of Change Management (including enabling process activities). Because the items are listed in order of increasing maturity, the position of the selected item serves as the score for that question. Summing the scores (similar to Schiesser's treatment) results in an overall measure for the process area.
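The scoring just described could be sketched roughly as follows. This is only an illustration under stated assumptions: the text does not give a numeric scale, so the sketch assumes each group of five items is scored 1 (least mature) to 5 (most mature) by position. The names `QuestionGroup`, `score_group` and `score_process` are hypothetical, and the statements are abbreviated from the Change Management table.

```python
from dataclasses import dataclass
from typing import Sequence

LEVELS = 5  # assumed: five statements per group, scored 1 (least mature) to 5


@dataclass(frozen=True)
class QuestionGroup:
    """One activity with its statements ordered by increasing maturity."""
    activity: str
    statements: Sequence[str]


def score_group(group: QuestionGroup, selected: int) -> int:
    """Score one group from the zero-based index of the chosen statement."""
    if len(group.statements) != LEVELS:
        raise ValueError(f"{group.activity}: expected {LEVELS} statements")
    if not 0 <= selected < LEVELS:
        raise ValueError(f"{group.activity}: selection {selected} out of range")
    return selected + 1  # position in the list doubles as the maturity score


def score_process(groups: Sequence[QuestionGroup],
                  selections: Sequence[int]) -> int:
    """Sum the group scores into a single measure for the process area."""
    if len(groups) != len(selections):
        raise ValueError("one selection is required per question group")
    return sum(score_group(g, s) for g, s in zip(groups, selections))


# Statements abbreviated from the Change Management table (illustrative only).
groups = [
    QuestionGroup("Change Calendar", (
        "Log of scheduled changes maintained",
        "Changes scheduled to avoid affecting one another",
        "Single consolidated schedule of upcoming changes",
        "Components cross-correlated across scheduled changes",
        "Past changes analyzed to shape scheduling policy",
    )),
    QuestionGroup("Process Responsibilities", (
        "Someone coordinates the scheduling of changes",
        "One person responsible for the entire process",
        "Practitioners aware of roles and responsibilities",
        "Responsibilities reflected in job descriptions",
        "Responsibilities reviewed annually",
    )),
]

selections = [2, 3]  # hypothetical answers: third and fourth statements chosen
total = score_process(groups, selections)
print(f"{total} of {LEVELS * len(groups)}")  # prints "7 of 10"
```

Keeping the per-group score separate from the sum makes it possible to report both the overall figure and the individual activities that pull it down, which is where improvement priorities would normally be drawn from.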
